Morphology and Syntax

Although our presentation of our theory of syntax has hopped from one side of the representational/derivational divide to the other, our (often implicit) conception of how morphology and syntax interact has remained constant. The job of syntax is to define a set of well-formed structures, whether by assembling them out of smaller pieces (i.e. derivationally) or by imposing constraints that well-formed structures must satisfy (i.e. representationally). Given such a structure, we identify the spans it contains, and ship them off to the morphology to interpret. This is a quintessentially post-syntactic perspective on morphology, and is relatively standard in the transformational tradition. It sort of stands to reason that if your syntax is manipulating tiny pieces, agglomerations of which represent words, then you should wait until the dust of manipulating these things has settled before you interpret them as parts of words.

In this post my aim is to show you that what we have been thinking of as post-syntactic morphology is really pre-syntactic morphology. And of course, that this pre-syntactic morphology is also really post-syntactic. And therefore that the question of whether morphology first constructs words, and then syntax puts them together, or whether syntax first puts things together, and then morphology interprets parts of these things as words, is ill-formed in this particular case: these describe not opposing theories, but mutually compatible perspectives on the same theory. This question, in our current setting, is like the question of which face of the Necker cube (figure 1) is its front.

Figure 1: A Necker cube (by BenFrantzDale - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=2040007)

The correct answer in the case of the cube is: both, and neither. The cube is just a figure, and the same figure can be viewed in multiple ways. Likewise, syntax and morphology just are a way of factoring the description of well-formed structures, and the same factorization can be viewed in multiple ways (either syntax or morphology first). I want to emphasize again that this ‘hot take’ on the morphology-syntax interface applies to the theory we have been developing (which I think is exactly the theory of minimalism, modulo any religious mumbo jumbo). It may or may not apply to other theories of syntax! It should, I think, make us skeptical about the cognitive content of many issues in the field: I think there is a real substantive discussion to be had that can be couched in these terms (as I have tried to suggest in my own work), but we have yet to identify what the real issue is that the debate about whether morphology is post-syntactic or pre-syntactic is obfuscating. I think many discussions in linguistics are like this.

Morphology forwards and backwards

In the last post we spoke about the morphological equations being extensional specifications of what a theory of morphology must do. A morphological equation is of the form \(w = \ell_{1} \oplus \dots \oplus \ell_{k}\), where on the left hand side is a word, and on the right hand side is a span, given as a sequence of lexical items.

We, in a post-syntactic morphological daze, have been interpreting these equations directionally, reading them from right to left as telling us how to interpret spans as words. However, we could just as well read them from left to right, as telling us how to interpret words as spans. This is a pre-syntactic perspective on morphology.
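The two readings can be made concrete with a small sketch. It uses the three morphological equations from the running example further down in this post; the names `EQUATIONS`, `spell_out`, and `decompose` are made up for illustration, and storing the equations as a Python dictionary is just one convenient assumption about how to write them down.

```python
# The morphological equations of the running example, stored once.
# Each word is paired with its span (a sequence of lexical items, highest first).
EQUATIONS = {
    "John": ("john",),
    "had": ("AgrS", "Past", "have"),
    "eaten": ("Perf", "eat"),
}


def spell_out(span):
    """Right-to-left reading (post-syntactic): interpret a span as a word."""
    for word, candidate in EQUATIONS.items():
        if candidate == tuple(span):
            return word
    raise KeyError(f"no equation for span {span!r}")


def decompose(word):
    """Left-to-right reading (pre-syntactic): interpret a word as a span."""
    return EQUATIONS[word]


# The same table serves both directions.
print(decompose("had"))              # ('AgrS', 'Past', 'have')
print(spell_out(("Perf", "eat")))    # eaten
```

Nothing in the table itself favors one direction over the other; the direction only shows up in which function we choose to call.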

In order to see what this perspective looks like for syntax, we ask:

how could syntax start from the outputs of morphology?

As the outputs of morphology (recall, we are reading equations from left to right) are spans, we can sharpen this question to:

how can syntax combine spans?

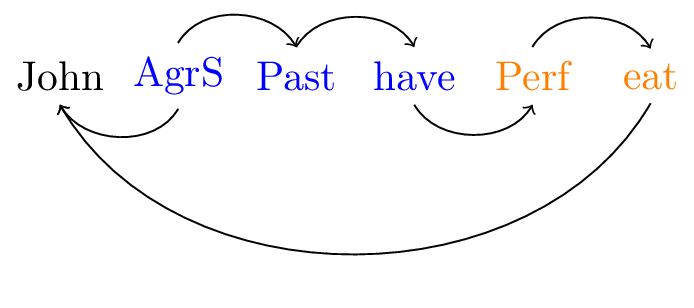

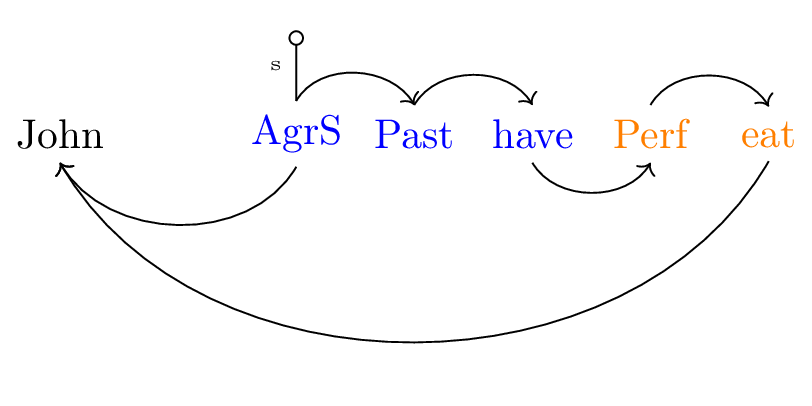

Consider as an example the dependency structure below, with attendant lexical items.

Figure 2: A well-formed dep struct

| Lexical items | | |
|---|---|---|
| \(\textsf{john}\mathrel{::}d.k\) | \(\textsf{have}\mathrel{::}\bullet x.m\) | \(\textsf{eat}\mathrel{::}\bullet d.v\) |
| \(\textsf{Past}\mathrel{::}\underline{\bullet m}.t\) | \(\textsf{Perf}\mathrel{::}\underline{\bullet v}.x\) | |
| \(\textsf{AgrS}\mathrel{::}\underline{\bullet t}.k\bullet.s\) | | |

| Morphological equations | | |
|---|---|---|
| \(\textit{John} = \textsf{john}\) | \(\textit{had} = \textsf{AgrS}\oplus\textsf{Past}\oplus\textsf{have}\) | \(\textit{eaten} = \textsf{Perf}\oplus\textsf{eat}\) |

We know how to construct this dependency structure from the lexical items in figure 2. This leads to viewing the morphological equations as telling us how to interpret the things we’ve built as words, and thus to a post-syntactic perspective on morphology. If we want to explore the pre-syntactic perspective, we need to start doing syntax not with individual lexical items, but with the spans on the right hand sides of the morphological equations, as shown below for the spans for the words John, had, and eaten.

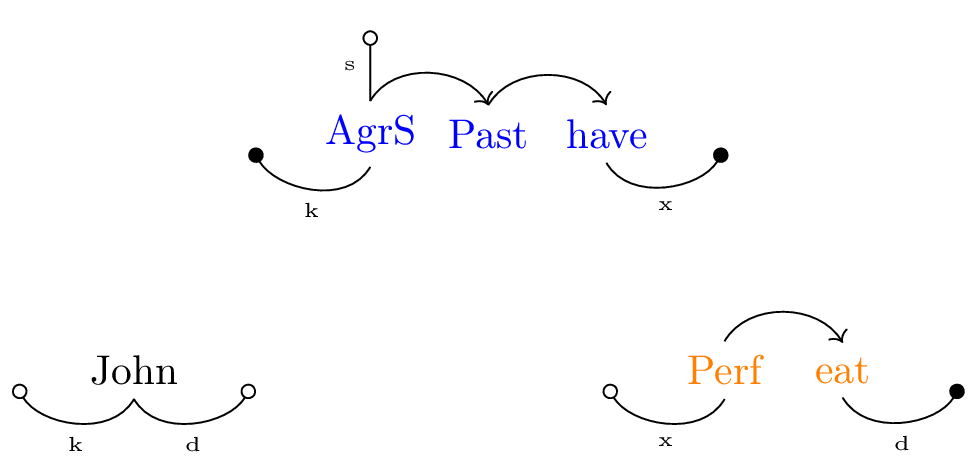

Figure 3: Spans for the words in figure 2

These spans look a little different from what we are used to seeing, but this is because we will be using them differently - now that the output of morphology is the input to syntax. Consider first the (trivial) span for John. The lexical item john has two features, d and k, both of negative polarity. The span for John has two edges connected to it, one labeled k and the other d, both ending with open circles. The open circles indicate that these are negative features. The edges are not connected to anything yet, because the features they represent have not been checked. These unconnected, dangling, or incomplete edges will need to be completed by connecting them to other incomplete edges; this act represents feature checking. There is no significance to the direction in which dangling edges hang - they’re just out there dangling.

Consider now the span for eaten. This span is composed out of the lexical items eat and Perf, both of which have two features: •v and x for Perf, and •d and v for eat. The span has three edges, two of which are unconnected. These two are labeled x and d: the x edge ends in an open circle because the unchecked feature it represents is negative, and the d edge ends in a closed circle because the unchecked feature it represents (the •d feature on eat) is positive. The edge from Perf to eat represents both the •v feature on Perf and the v feature on eat. This edge connects Perf to eat because the •v feature on Perf checks the v feature on eat. I did not write a feature name above this edge because the associated features have already been checked.

Finally, the span for had is composed of three lexical items: AgrS, Past, and have. AgrS has three syntactic features, •t, k•, and s. The first two are positive, the last negative. Of these three features, only the first is checked in the span of had; it is represented by the arrow from AgrS to Past. The unchecked positive k• feature is represented by the dangling edge ending in a solid circle labeled k, and the unchecked negative s feature is represented by the dangling edge ending in an open circle labeled s. The rest of the span is read in the same way: the •m feature on Past checks the m feature on have, and the unchecked positive •x feature on have is a dangling edge ending in a solid circle labeled x.
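The bookkeeping in the last three paragraphs can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than official notation: features are written as (label, polarity) pairs, with "+" for positive (closed circle) and "-" for negative (open circle); `LEXICON` and `dangling_edges` are made-up names; and the rule used for span-internal edges (the first feature of the higher item checks the first negative feature of the item below it) is simply my reading of the three example spans.

```python
# Features in the order they appear on each lexical item.
LEXICON = {
    "john": [("d", "-"), ("k", "-")],
    "have": [("x", "+"), ("m", "-")],
    "eat":  [("d", "+"), ("v", "-")],
    "Past": [("m", "+"), ("t", "-")],
    "Perf": [("v", "+"), ("x", "-")],
    "AgrS": [("t", "+"), ("k", "+"), ("s", "-")],
}


def dangling_edges(span):
    """Return the unchecked features of a span as (node, label, polarity),
    lowest node first, and in feature order within a node.

    `span` lists its lexical items from highest to lowest,
    e.g. ("AgrS", "Past", "have").
    """
    checked = set()
    for upper, lower in zip(span, span[1:]):
        label, polarity = LEXICON[upper][0]
        # Position of the first negative feature on the lower item.
        idx = next(i for i, (_, p) in enumerate(LEXICON[lower]) if p == "-")
        if polarity != "+" or LEXICON[lower][idx][0] != label:
            raise ValueError(f"{upper} cannot select {lower}")
        checked.add((upper, 0))
        checked.add((lower, idx))
    return [
        (item, label, polarity)
        for item in reversed(span)
        for i, (label, polarity) in enumerate(LEXICON[item])
        if (item, i) not in checked
    ]


print(dangling_edges(("john",)))
# [('john', 'd', '-'), ('john', 'k', '-')]
print(dangling_edges(("Perf", "eat")))
# [('eat', 'd', '+'), ('Perf', 'x', '-')]
print(dangling_edges(("AgrS", "Past", "have")))
# [('have', 'x', '+'), ('AgrS', 'k', '+'), ('AgrS', 's', '-')]
```

The order of each returned list is the order in which the dangling edges of that span have to be completed.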

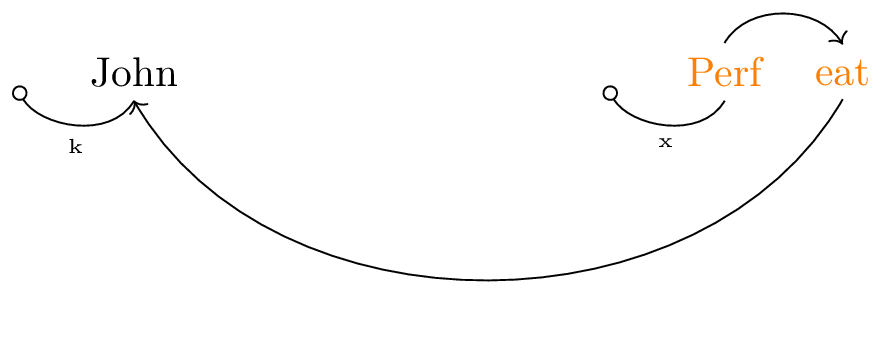

Starting out with spans with dangling edges, we need to connect them to one another. Just as with ‘normal’ lexical items, the dangling edges of a span should be connected in a particular order: the lowest dangling edge must be dealt with first. Multiple dangling edges on a single node in a span are to be checked in the order in which the features they correspond to occur on the lexical item that node represents. So in John, for example, the dangling d edge must be completed before the dangling k edge.

Let us work out how these spans can be assembled. Eyeballing the three spans above, the lowest features of each are:

- the open d edge of the node John in the span John

- the closed d edge of the node eat in the span eaten

- the closed x edge of the node have in the span had

Of these three possibilities, only the d edges match - they have the same label and opposite polarity. We connect John and eaten together via their dangling d edges. This results in the dependency structure below.
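The matching condition just used (same label, opposite polarity) is simple enough to check mechanically. Here is a minimal sketch, where the dictionary `lowest` and the function `matches` are hypothetical names, and "+" and "-" stand for closed and open circles respectively.

```python
from itertools import combinations

# The lowest dangling edge of each span, as (label, polarity).
lowest = {
    "John": ("d", "-"),   # open d edge on john
    "eaten": ("d", "+"),  # closed d edge on eat
    "had": ("x", "+"),    # closed x edge on have
}


def matches(edge_a, edge_b):
    """Two dangling edges match if they share a label and differ in polarity."""
    return edge_a[0] == edge_b[0] and edge_a[1] != edge_b[1]


# Try every pair of spans and keep the ones whose lowest edges match.
matching_pairs = [
    (a, b) for a, b in combinations(lowest, 2) if matches(lowest[a], lowest[b])
]
print(matching_pairs)  # [('John', 'eaten')]
```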

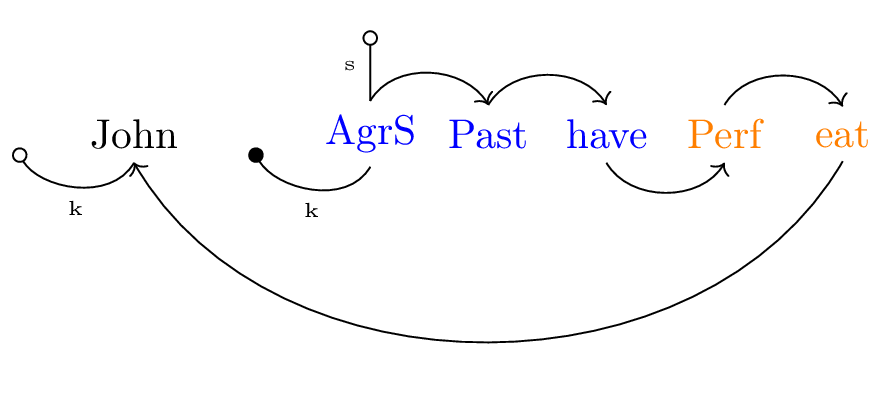

The resulting dependency structure has two dangling edges, one labeled x and the other k. They belong to different spans, and so may in principle be checked in any order. In fact, however, the x edge is on the span which is (or which contains) the head of the dependency structure, Perf, and so only it may be targeted by an external merge step. At this point, the lowest dangling edge on the span for had matches this x edge. We connect them together.

In the newly assembled structure in figure 5, there are three dangling edges. The two labeled k belong to different spans, and thus may be completed in any order. The dangling edges k and s originating on AgrS belong to the same span, and must be completed in a particular order: k must be completed before s. Accordingly, the matching dangling k edges (on John and AgrS) may be connected.

Figure 6: Assembling spans (III)

In the dependency structure above there remains one dangling edge, labeled s. This corresponds to the one unchecked negative feature s on the lexical item AgrS. We have not been representing this last negative feature on our previous dependency structures (at least, not since I made the switch from the ‘boxes’ version), but it is common in the dependency literature to have an arrow from heaven pointing at the head of a dependency structure. So, we’re done.
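The whole assembly can be replayed as a small simulation. This is a deliberately simplified sketch: each span is reduced to its ordered list of dangling edges, only the first remaining edge of a span is available, and two available edges are connected when they share a label and have opposite polarity. It does not model the distinction between merge and move, nor the restriction that external merge targets the head; for this example those restrictions happen not to matter. The name `assemble` and the encoding of edges as (node, label, polarity) triples are assumptions made for illustration.

```python
from collections import deque

# Dangling edges of each span, lowest first: (node, label, polarity).
SPANS = {
    "John": [("john", "d", "-"), ("john", "k", "-")],
    "eaten": [("eat", "d", "+"), ("Perf", "x", "-")],
    "had": [("have", "x", "+"), ("AgrS", "k", "+"), ("AgrS", "s", "-")],
}


def assemble(spans):
    """Repeatedly connect matching first-available edges of two spans.

    Returns the connections made, in order, and the edges left dangling.
    """
    queues = {word: deque(edges) for word, edges in spans.items()}
    connections = []
    progress = True
    while progress:
        progress = False
        words = [w for w in queues if queues[w]]
        for i, a in enumerate(words):
            for b in words[i + 1:]:
                node_a, label_a, pol_a = queues[a][0]
                node_b, label_b, pol_b = queues[b][0]
                if label_a == label_b and pol_a != pol_b:
                    queues[a].popleft()
                    queues[b].popleft()
                    connections.append((node_a, node_b, label_a))
                    progress = True
                    break
            if progress:
                break
    leftover = [edge for q in queues.values() for edge in q]
    return connections, leftover


connections, leftover = assemble(SPANS)
print(connections)
# [('john', 'eat', 'd'), ('Perf', 'have', 'x'), ('john', 'AgrS', 'k')]
print(leftover)
# [('AgrS', 's', '-')]
```

The three connections come out in the same order as the three steps in the text, and the one leftover edge is the s edge on AgrS, the one that the arrow from heaven would point at.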

Assembling spans is exactly the same as assembling lexical items, only, well, bigger. Could we maybe represent spans as lexical items? No. A span has many more internal ‘positions’ for merged and moved expressions to end up in; a lexical item, if it triggers movement of something, will always end up to the right of that thing. But the intuition that spans are very much like lexical items is more right than not. Chomsky, when originally publishing the minimalist program, presciently came up with a morphology-first system very similar to the one here, which has been called ‘strict lexicalism.’ In Chomsky’s system, words (like had) were inserted fully inflected into a V node, and then had features that made them head-move to various positions. The features that fully inflected words had were essentially records of what nodes they were made up of. If you squint (and you don’t have to do much squinting), you can see the same idea that we have presented here, in a nascent form.

Homework

Please choose some construction in some language (e.g. your favorite construction in your favorite language) to analyze in the manner of this course. Please start out with ‘reasonable’ (in your opinion) whole-word dependency structures, and, using lexical decomposition, see what kind of analysis you arrive at. Note that the way we proceeded in this course was to start small and to gradually work our way up.