Monotonicity and reasoning with determiners

Last time we saw that natural language determiners can be classified according to the information they require to determine whether their noun argument is appropriately related to their predicate argument, and that this classification seemed to have some broader linguistic reality.

This time, we will take a closer look at the partial order relation (\(\le\)) that is associated with any boolean domain. Recall that in the domain of truth values, the boolean order coincides with implication (with \(b \le c\) holding just in case \(b\) implies \(c\)), and that in the domain of sets, the boolean order coincides with the subset relation (with \(P \le Q\) holding just in case \(P\) is a subset of (or is equal to) \(Q\)). Consider the following valid inferences:

- Every student is running quickly \(\vdash\) Every student is running

- Some student is jumping \(\vdash\) Some student is moving

- At least 4 students are laughing \(\vdash\) At least 4 students are not silent

In each of these inferences, we have the same determiner and nominal argument on the left as on the right: \[Det\ N\ P \vdash Det\ N\ Q\] Furthermore, the predicate of the antecedent denotes a subset of the predicate of the consequent: \(P \subseteq Q\).

- run quickly \(\subseteq\) run

- jump \(\subseteq\) move

- laugh \(\subseteq\) not silent

To see this consider that if something runs quickly, than it necessarily also runs. Similarly, if something jumps, it must necessarily move. And finally, if something laughs, it must not be silent (ignoring the artificial silent laughing you learn how to do in kindergarten).

Let us revisit our semantic explanation of inferences. An inference

\(P_{1},\ldots,P_{n} \vdash Q\) is predicted by our semantic theory

just in case whenever all the antecedent sentences are true, the

consequent is true, in other words, just in case the conjunction of

the antecedents imply the consequent: \(P_{1} \wedge \dots \wedge

P_{n} \rightarrow Q\). As noted above, implication in the domain of

truth values is the boolean order of that domain. So an inference is

predicted to hold whenever the boolean meet of the antecedents are

less than or equal to the consequent. Returning to our entailments

above, they have but a single antecedent, and so are predicted to hold

if the antecedent is less than or equal to the consequent. This

happens in the above cases whenever the antecedent predicate is itself

less than or equal to the consequent predicate: \[\text{if }P \le Q

\text{ then } Det\ N\ P \le Det\ N\ Q\] This property is called

(upward) monotonicity. We can state it generally in terms of

functions as follows: increasing the inputs increases the

outputs:1 \[\text{if }x \le y\text{ then }f\ x \le f

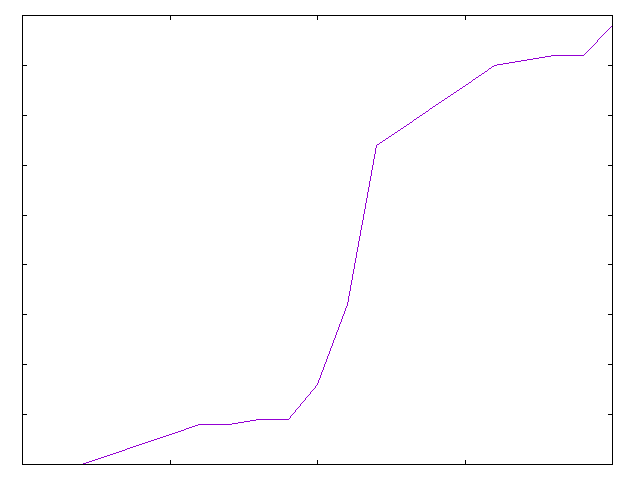

y\] It is easy to visualize upward monotonicity by viewing the graph

of a function. (On real numbers, a (continuous) function is upward

monotone if its first derivative is never negative.)

Figure 1: An upwards monotone function

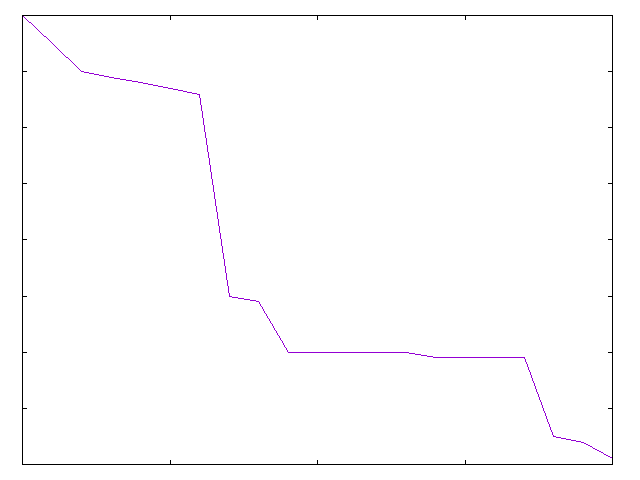

If a function is upwards monotone, we sometimes say that it preserves the order (of its inputs). Similarly, a downwards monotone function is one that reverses the order on its arguments (note that the relation between \(f x\) and \(f y\) has flipped): \[\text{if }x \le y\text{ then }f\ x\ge f\ y\]

It is easiest to visualize a downwards monotone function via its graph (on real numbers, the first derivative of a (continuous) downwards monotone function is never positive.)

Figure 2: An downwards monotone function

Upward and downward monotone functions are sometimes called increasing and decreasing functions, respectively.

Monotonicity properties are definable whenever a function has an ordered domain and an ordered codomain (i.e. when the inputs are ordered and the outputs are ordered), which is always the case when domain and codomain are both lattices.

Now let us return to language, and do some empirical (classificatory) work! We have seen that the denotations of both every and some are increasing (upward monotone) on their second argument. This is to say, for any noun phrase denotation \(N\), we have that if \(P \le Q\), then

- \(every\ N\ P \le every\ N\ Q\)

- \(some\ N\ P \le some\ N\ Q\)

The same holds true for (infinitely) many other determiners, like at least 4.

Let us look at the first argument of every and some:

- every tall boy laughs \(\not\vdash\) every boy laughs

- some tall boy laughs \(\vdash\) some boy laughs

Here we see that some is, but every is not, increasing on its

first argument. This means, to be precise, that the function \(some

P\) is less than or equal to the function \(some\ Q\) whenever \(P \le

Q\). Recall that a function \(f\) is less than or equal to a function

\(g\) if the output of \(f\) on any input is always less than or equal

to the output of \(g\) on that same input. So we are saying that for

any predicate argument \(S\), \(some\ P\ S\le some\ Q\ S\) whenever

\(P \le Q\). This is of course an empirical claim, namely, that for

any predicate expression S, and any two nominal expressions P and

Q, if being P always entails being Q, then some P S entails

some Q S. We cannot of course prove that this claim holds

empirically, as we would have to consider an infinite amount of data.

However, all the data we care to check verifies this, and we can show

that, given our truth conditions for some, we predict this to

obtain:

- assume \(P \subseteq Q\)

- if \(some\ P\ S = \textbf{True}\) then \(\left| P\cap S\right| \not= 0\)

- if \(\left| P \cap S\right| \not= 0\), then \(\left| Q \cap S\right| \not= 0\)

- but then \(some\ Q\ S = \textbf{True}\)

As this conclusion doesn’t depend on the predicate argument \(S\), it

holds for any predicate, and so we have shown that \(some\ P\le some

Q\) under the assumption that \(P \le Q\).

Although every is not increasing on its first argument, it is decreasing:

- every boy laughs \(\vdash\) every tall boy laughs

here we assume that \(tall\ boy \le boy\), which is intuitive: every tall boy is also a boy. Our denotation for every also predicts this:

- assume \(P \subseteq Q\)

- if \(every\ Q\ S = \textbf{True}\) then \(\left|Q - S\right| = 0\)

- if \(\left| Q - S\right| = 0\), then \(\left| P - S\right| = 0\)

- but then \(every\ P\ S =\textbf{True}\)

We can continue our classificatory task:

- no tall boy laughs \(\not\vdash\) no boy laughs

- no boy laughs \(\vdash\) no tall boy laughs

So no is, like every, decreasing on its first argument.

- no boy laughs loudly \(\not\vdash\) no boy laughs

- no boy laughs \(\vdash\) no boy laughs loudly

And no is also decreasing on its second argument. Our denotation for no also predicts this (but I will not write this out here).

Not every determiner is monotonic (either upwards or downwards). For example, most is neither upward nor downward monotonic in its first argument:

- most tall boys laugh \(\not\vdash\) most boys laugh

This inference fails in a model where all tall boys laugh, most boys aren’t tall, and no non-tall boys laugh.

- most boys laugh \(\not\vdash\) most tall boys laugh

This inference fails in a model where no tall boys laugh, all non-tall boys laugh, and most boys are not tall.

However, most is increasing on its second argument:

- most boys laugh loudly \(\vdash\) most boys laugh

We can summarize this behaviour in a table:

| Determiner | First Argument | Second Argument |

|---|---|---|

| every | - | + |

| some | + | + |

| no | - | - |

| most | xxx | + |

We write \(+\) if the determiner in question is increasing in a particular argument, \(-\) if decreasing, and xxx if neither.

Note that we can classify not only determiners in this way, but any function over lattices; this includes the boolean operations of negation, conjunction and disjunction! Observe that if \(f \le g\) then \(\neg g \le \neg f\): let \(x\) be any valid input, and consider that \(f\ x \le g\ x\). This means that, by definition, \(f\ x = f\ x \wedge g\ x\) and \(g\ x = f\ x \vee g\ x\). We want to show that \((\neg g)\ x = \neg (g\ x) \le \neg (f\ x) = (\neg f)\ x\). This will be true if \(\neg (g\ x) = \neg (g\ x) \wedge \neg (f\ x)\) and if \(\neg (f\ x) = \neg (g\ x) \vee \neg (f\ x)\). By de Morgan’s laws, we have that \(\neg(g\ x) \wedge \neg(f\ x) = \neg (g\ x \vee f\ x ) = \neg (g\ x)\). Similarly, we have that \(\neg (g\ x) \vee \neg (f\ x) = \neg (g\ x \wedge f\ x) = \neg (f\ x)\). Thus boolean negation is decreasing in its only argument!

We can show in a similar way that conjunction and disjunction are increasing in both arguments.

Consider now a sentence like the following (with ample negations, conjunctions and disjunctions):

- No non-student either doesn’t laugh or both cries and doesn’t jump

(This has the basic shape: \(no\ \neg A\ (\neg P \vee (Q \wedge \neg R))\)) Assume furthermore that we know that \(Q' \subseteq Q\); perhaps \(Q'\) is cries loudly. How can we determine whether the previous sentence is predicted to entail the following:

- No non-student either doesn’t laugh or both cries loudly and doesn’t jump

We can reason as follows:

no is decreasing on its second argument, and so the entailment should hold if \(\neg P \vee (Q'\wedge \neg R)\subseteq \neg P \vee (Q \wedge \neg R)\). Disjunction is increasing on both arguments, so this should hold if \(Q'\wedge \neg R\subseteq Q\wedge \neg R\). Finally, Conjunction is also increasing on both arguments, and so this should hold just in case \(Q'\subseteq Q\).

Note that this reasoning does not depend at all on the denotations we have assigned to the expressions, only on their monotonicity properties! This sort of reasoning allows us to ask of any part \(X\) of a sentence \(\phi\), whether \(X\) occurs in an increasing (or decreasing, or neither) portion of that sentence. If an expression occurs in an increasing position, I will say it occurs positively, and if it occurs in a decreasing position, I will say it occurs negatively.

We can give a more precise characterization of when an expression occurs positively in another as follows.

- \(A\) occurs positively in itself

- If \(F\) is increasing, then

- everything that occurs positively in \(F\) or in \(X\) occurs positively in \(F\ X\)

- everything that occurs negatively in \(F\) or in \(X\) occurs negatively in \(F\ X\)

- If \(F\) is decreasing, then

- everything that occurs positively in \(F\) or negatively in \(X\) occurs positively in \(F\ X\)

- everything that occurs negatively in \(F\) or positively in \(X\) occurs negatively in \(F\ X\)

We can now formulate a pair of general monotonicity inference rules. I will use \(\phi[A]\) as a convenient way of talking about an expression \(\phi\) which contains an \(A\) somewhere. Then I will write \(\phi[B]\) to indicate that I have replaced that particular \(A\) in \(\phi\) with \(B\). So if \(\phi[cries]\) is our first sentence above, then \(\phi[cries\ loudly]\) was the sentence we asked whether was entailed.

- if \(A\) occurs positively in \(\phi[A]\), and \(A \le B\), then \(\phi[A]\le\phi[B]\)

- if \(A\) occurs negatively in \(\phi [A]\), and \(A \le B\), then \(\phi [A]\ge\phi [B]\)

-

It is more precise to say that increasing the inputs doesn’t decrease the outputs. This is because all that is required is that \(f(x) \le f(y)\) whenever \(x\le y\), which is also satisfied when \(f(x) = f(y)\). ↩︎